Ah, welcome back to Angular Space, where my articles describe smooth-running development pipelines and then watch them perish (sometimes). In this thrilling third installment (yay! 3 articles!), let's dive into one of the most exquisite nightmares Angular devs are forced to endure from time to time: testing issues and, specifically, the sheer agony of long test executions.

Motivations and goals

This article is purely conceptual and, spoiler alert, doesn't have much code. Instead, it dives into the beautiful disaster that is your test startup time, breaking down why your pull request turns into a tragic symphony of misery the moment it hits your CI pipeline. Think of it less as a tutorial and more as a support group for developers questioning their life choices while waiting for their tests to run.

Recommended reading

I suggest reading Deep dive into Nx Affected first. It gives an overview of how tests run in an Nx workspace when things change, which is perfect background for this horror story.

Grab some popcorn, and water (I don't want you to choke).

Introduction

Before we begin, here is the setup under observation:

- Nx Monorepo (more likely 16, or anything modern if you want) – because managing a single project in a separate repo wasn't soul-crushing enough.

- Multiple Angular 16 applications – since leadership is too terrified to pull the trigger on a full migration to LTS... cowards...

- Internal libraries galore – the glue that keeps our UI components functioning as intended across the workspace... A happy salad of code courtesy of your transcontinental team's efforts.

- Third-party organizational UI dependencies – an unholy mix of Angular 14 and 16, because consistency is for the weak. Poorly architected, yet by some miracle it works as intended.

- Everything is beautifully written in TypeScript – or so we tell ourselves.

- Testing is handled by Jest – courtesy of Nx's "community support" since Nx 14. Jasmine was not fun.

And here we go...

It's 9:00 AM. You drag yourself to your desk. You turn on your PC. You sip your coffee from that “Official Angular Space” mug—except it is not an official one. No, because the Angular Space dude never sent one to you. So, in an act of sheer defiance, you took a permanent marker and made your own from that purple mug courtesy of some cellphone provider. Artistic genius.

Then, you open your inbox.

Emails flood in from your transcontinental teams. The build times are horrendous. They rage, weep, suffer. Dante's hell is small in comparison to this. Some common task on the pipeline, like nx affected -t test, should take mere seconds… but no. No. They take 15 to 30 minutes just to run some trivial tests.

You glance at your mug. The coffee inside has grown colder. It sits at the edge of your desk now, trembling under the weight of your impending despair.

You check the logs. Nothing. The Jenkins execution times glare back at you like a cruel joke. That cold feeling in your legs is not from a lack of exercise; it is the first symptom of panic (a feeling that by now you know very well).

DAMN THAT IS SLOW!

Tests—those seemingly innocent creatures—have somehow mutated into monstrous entities that take minutes just to wake up, only to sprint through execution in mere seconds. No errors, no warnings—just soul-draining startup times that make no sense.

And yes, even if you're using nx cloud, with your army of Nx agents working in parallel, some unlucky ones might still experience the delightful mystery of tests that crawl at an inexplicably slow pace for no apparent reason. Why should anything be predictable?

So, let's put on our detective hats and investigate:

Why the hell is a simple three-case unit test taking five whole minutes (or even more) just to start?

How Nx does testing with Jest

By now, your coffee has gone cold, your colleagues are angrily DM'ing you about "Why are tests so slow?" and you're questioning why you became a developer in the first place.

Let's take a closer look at how Nx Framework handles testing with Jest to understand why time—and your coffee—slips away before the tests finish. It's a process that works but serves as a stark reminder of how fleeting and precious time really is.

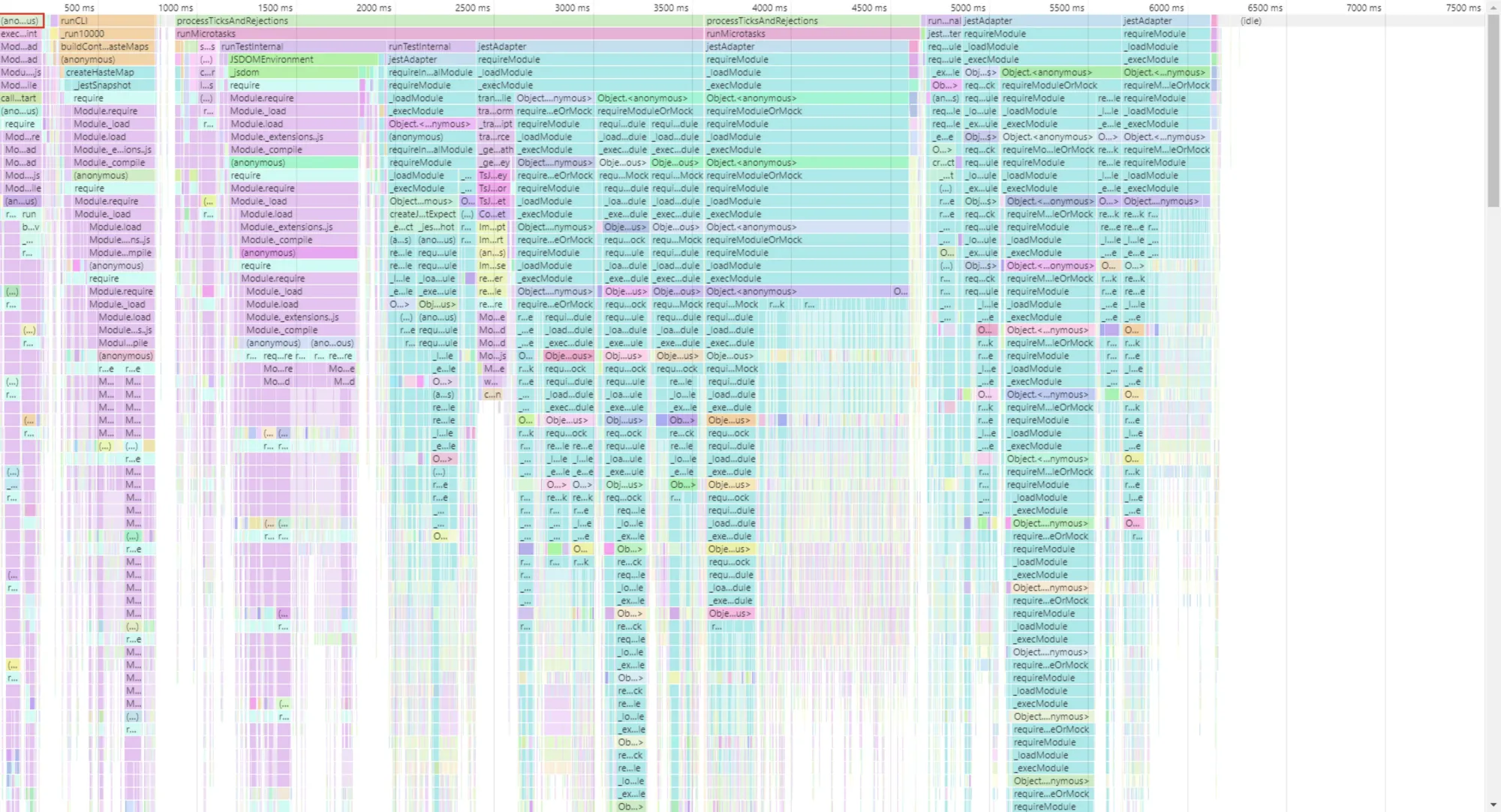

TL;DR: What Nx Does with Jest - Animated Version

You may be a visual learner, and of course I haven't forgotten how you take in information. This chart was designed to help you build a mental map of the process: a general overview of how Nx and Jest work together during testing, without reminding you along the way of whatever problem your slow tests are giving you right now. Pick your poison.

Test Code Transpilation

Now that you have decided to understand what is happening behind the scenes, grab those blue latex gloves, a surgical coat, and the broom and dustpan combo from your kitchen, and let's check what is happening with your slow tests one step at a time. After all, this is an autopsy, not a crime scene.

When you run nx test in a library configured for testing with Jest (check project.json), Nx uses @nx/jest to run ts-jest over your tests at setup time to transpile the code. Because one round of transpilation isn't enough, every Jest run has to transpile your TypeScript code before it can run the tests. Once the tests pass, their results are stored in the Nx cache until you run nx reset, just to grace your day by rerunning things from scratch (test and build targets tend to be cached when they run successfully... but not here). Forget the built code from the first CI pass, usually nx affected -t build, which usually lands in the dist folder: Jest insists on handling transpilation itself. Why not, right? It's not like you had anything better to do with those extra minutes.

And what do you do with those extra minutes? Of course, you could memory-profile the test! For example, you could determine what happens before the test, or during it. While time passes on your profiling, Jest ends up drawing a spectacular graph as it figures things out:

Workspace test preset

The workspace configuration relies on a preset file, usually jest.preset.js. This mystical file is the core configuration for your workspace, dictating how tests across separate apps and libraries should behave. It's like a set of laws written on stone tablets, except there is nothing divine about it or about what is written on it.

Jest Treats Each App/Library as an Island

Jest isolates apps and libraries during testing by design. When you set Jest as the test runner for an application or library, Nx doesn't just shrug and leave you hanging. Instead, it kindly prepares specific configurations, sprinkling magic dust (read: boilerplate) so everything "just works". It is rigid enough to make every test play by the same rules... but hey, on the bright side, this ensures no cross-contamination between tests; on the darker side, it adds a layer of overhead when working in a monorepo.

Your test-setup.ts and jest-preset-angular

The test-setup.ts file includes a reference to jest-preset-angular, a package that provides the baseline for running Angular-specific tests, which is plain and generic across apps and libraries. Unless you want something quite specific (like defining some global configuration for a specific library/app), test-setup.ts is a sad photocopy in each app/library that uses it.
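For reference, here is roughly what those pieces look like when wired together in a single project. This is a sketch of a project-level jest.config.ts as Nx generators tend to produce it for an Angular library. The project name my-lib and the relative paths are hypothetical, and the exact transform options vary between Nx and jest-preset-angular versions, so treat it as orientation rather than something to paste.

```typescript
// libs/my-lib/jest.config.ts (hypothetical project name and paths)
export default {
  displayName: 'my-lib',
  // Ties this project back to the workspace-wide preset.
  preset: '../../jest.preset.js',
  // The per-project setup file that references jest-preset-angular.
  setupFilesAfterEnv: ['<rootDir>/src/test-setup.ts'],
  coverageDirectory: '../../coverage/libs/my-lib',
  transform: {
    // TypeScript, JavaScript and HTML templates all go through the Angular preset.
    '^.+\\.(ts|mjs|js|html)$': [
      'jest-preset-angular',
      {
        tsconfig: '<rootDir>/tsconfig.spec.json',
        stringifyContentPathRegex: '\\.(html|svg)$',
      },
    ],
  },
  // Everything in node_modules is left alone, except .mjs files.
  transformIgnorePatterns: ['node_modules/(?!.*\\.mjs$)'],
};
```

Every app and library gets its own copy of a file like this, which is exactly why each one behaves like an island: same rules, separate setup, separate transpilation work.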

The test Executor in project.json

Located under the test target in your project.json, the executor points to @nx/jest. It acts as the middleman, passing the workspace preset configuration and whatever additional parameters you throw at it. Think of it as a reluctant courier—you give it a package, and it delivers it (eventually).

The Executor Uses Jest to Transpile and Run Tests

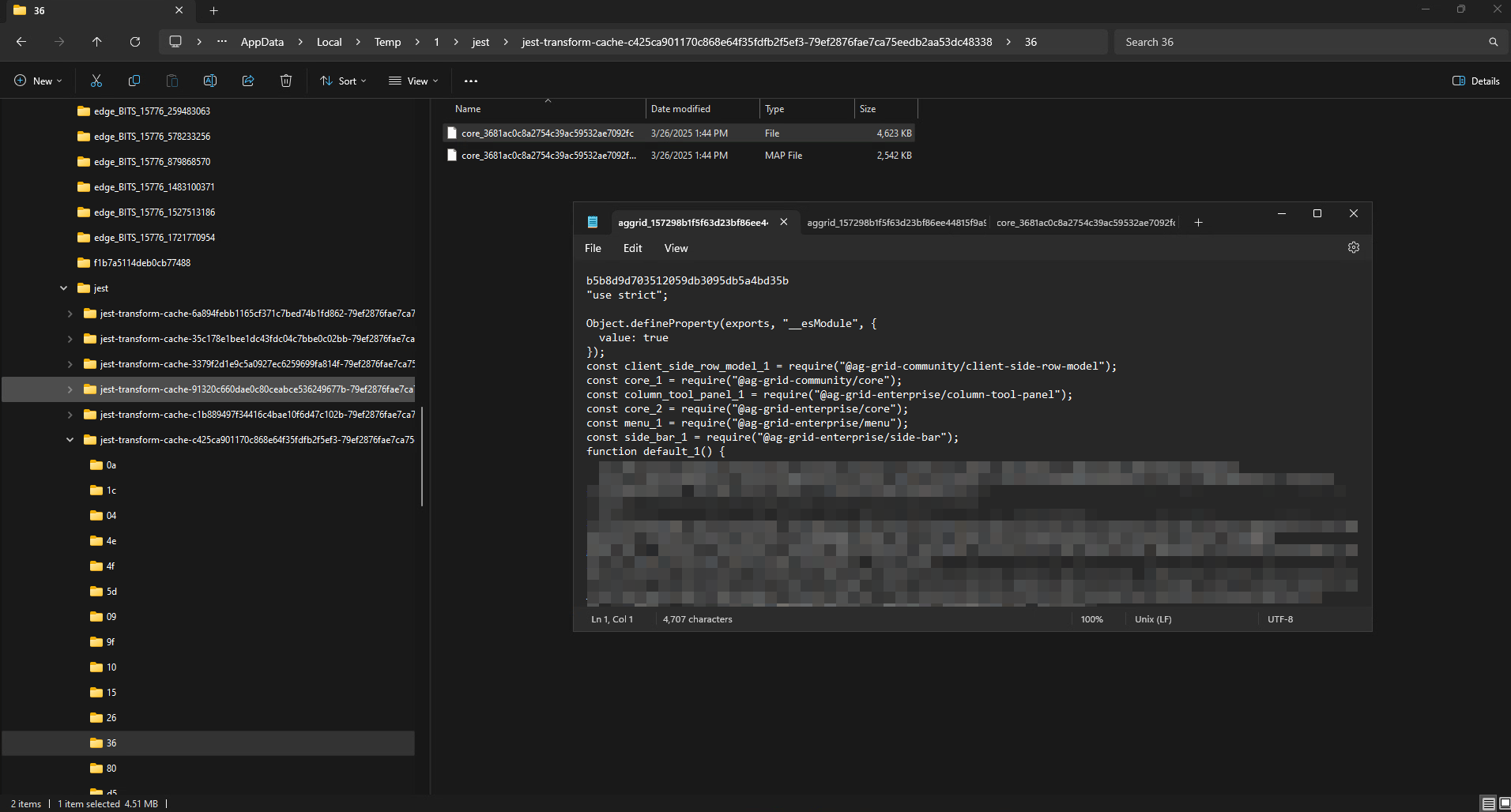

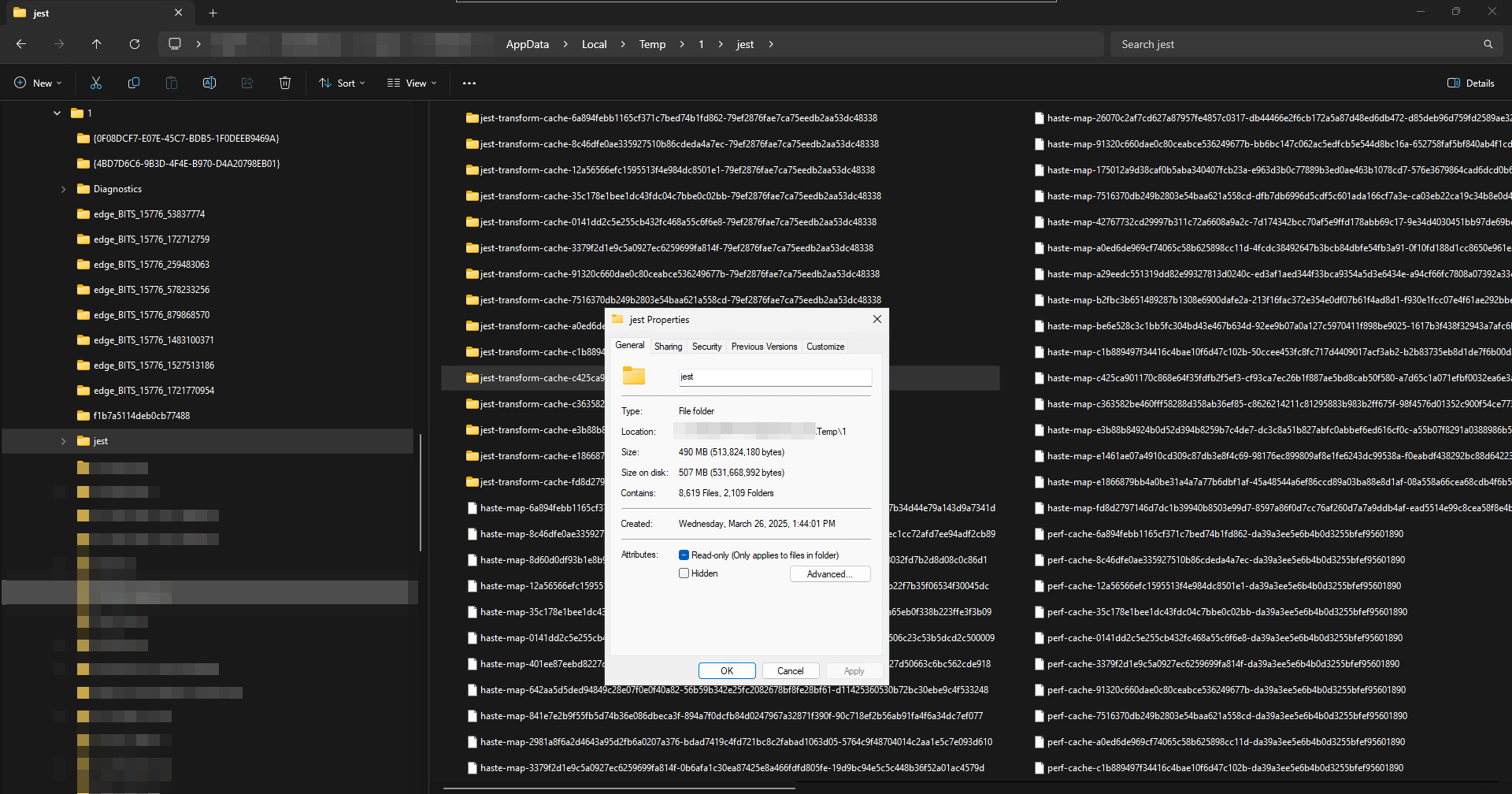

And here's the kicker: the executor calls Jest, which then transpiles your code AGAIN to run the tests. Because why settle for one round of transpilation when you can have two? It's the gift that keeps on giving (your CI pipeline gets an extra headache, and you get a last-minute roundup on the architecture meeting call). If you dig deep enough, you will find that it creates a /jest folder in your system tmp folder, where all the transpiled code will live.

Third-Party Dependencies and Modules

Now that we have a clear picture of how Nx, Jest, and Angular interact, let's talk about those monolithic third-party packages that somehow always find a way to make your life harder.

Remember those Angular 14 libraries we mentioned earlier? The ones clinging onto life in your workspace? Well, in this case, every single component (or sometimes hundreds of them) lives inside modules. And here's where things get interesting or, depending on your mood, miserable.

See, in an attempt to "simplify" usage, some brilliant decisions lead to absurdly massive modules being passed around like a family heirloom. The problem? Each time a test runs, it needs to resolve the module and all of its components. Yes, every single time. If Jest doesn't cache it, grab some popcorn and enjoy the slow-motion train wreck of unnecessary resolutions.

ts-jest and Code Transpilation

When large modules are referenced in a test for a component without being passed or mocked, Jest doesn't just ignore them. No, it transpiles everything. And because we love torturing ourselves, let's verify what's happening:

Run this command at the root of your workspace:

node ./node_modules/.bin/jest --clearCache

This clears Jest's cache and prints the cache directory Jest uses. Keep an eye on that directory, literally. You will see.

Now, run your problematic test. If you suddenly see the directory growing like an unchecked memory leak, well, congratulations: you've found one of the many reasons your tests are moving at glacial speed.
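If staring at a folder is not your thing, a small script can do the watching for you. The sketch below is a hypothetical helper (it is not part of Jest or Nx) that counts the files and bytes under a directory. Run it against the cache directory Jest printed, once before and once after the slow test, and compare the numbers.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";

interface DirStats {
  files: number;
  bytes: number;
}

// Recursively counts regular files and their sizes under `dir`.
// Returns zeros when `dir` does not exist or is not a directory.
function measureDir(dir: string): DirStats {
  const stats: DirStats = { files: 0, bytes: 0 };
  if (!fs.existsSync(dir) || !fs.statSync(dir).isDirectory()) {
    return stats;
  }
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      const child = measureDir(fullPath);
      stats.files += child.files;
      stats.bytes += child.bytes;
    } else if (entry.isFile()) {
      stats.files += 1;
      stats.bytes += fs.statSync(fullPath).size;
    }
  }
  return stats;
}

// Optional CLI usage: pass the cache directory as the first argument.
const cacheDirArg = process.argv[2];
if (cacheDirArg) {
  const { files, bytes } = measureDir(cacheDirArg);
  const mib = (bytes / (1024 * 1024)).toFixed(1);
  console.log(`${cacheDirArg}: ${files} files, ${mib} MiB`);
}
```

A jump of thousands of files for a three-case spec is a strong hint that the test is dragging in far more code than it actually exercises.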

Note: Did I Say Memory Leak? YES.

One of the main reasons a Jest test run exits for no apparent reason is that the sheer amount of transpiled code becomes too much for it to handle. In CI, Jest, in its desperate attempt to process more than it can manage, eventually just gives up. No error message. No logs. No graceful failure.

Just… nothing.

It's like watching someone stare into the abyss—except, in this case, the abyss stares back, then quietly shuts down your test run without so much as an exit code.

One Test File. Three Test Cases. Five (or more) Minutes.

Now, let's address the elephant in the room, which is most likely a potential memory leak.

Let's assume your component is purely presentational. No heavy logic. No complexity. Just an SSS component (Super Simple Stupid).

So why, in the name of everything sacred, does this test take 5 minutes to start?

Well, let's park the memory leak theory for a second. Upon further analysis, yeah, it could be the case. But there's another, even more frustrating possibility.

When ts-jest starts transpiling ALL THE CODE related to the component at test startup, you'll notice a tree of files and folders forming at great speed in THE FOLDER I TOLD YOU TO WATCH. It's like watching a horror movie where every new scene brings another terrible revelation.

Normally, you'd use transformIgnorePatterns in your Jest configuration to skip unnecessary files from being transformed. But in cases like these, the sheer number of files being processed is absurd. The deeper you dig, the more you uncover a sprawling, tangled mess of transpiled dependencies.

Suddenly, deep inside Jest's transpilation logs, you recognize some familiar faces from your codebase:

- Ag-Grid? Yep, you see some code for cell renderers, a familiar API implementation here and there, and recognizable functions you use for your grids. Fantastic.

- Angular Material? Of course.

- Random three-line transpiled files that serve no visible purpose? You bet.

- And that component that somehow got clustered into the module of that UI library, but is not being used at all.

The list of files being generated doesn't end for a test that small. And the worst part? The more dependencies Jest has to transpile from such modules, the bigger the memory load.

It is a three (3) case test, for God's sake! And the number of files generated by the transpilation doesn't seem to stop. The clock keeps ticking, and your anxiety skyrockets, as the terminal shows no visible sign that it has finished the process and started doing something.

This is where things start breaking in CI. On nx affected -t test, you might not even get an exit error code, just a void. No output. No explanation. Nada. Some affected apps and libraries show results, but that problematic library or app responds with sheer silence in defiance of your carefully planned work, as if Jest itself has given up on life.

It's like the old tale of the milkmaid—she carried too many jugs of milk, only to end up with everything shattered on the ground. Except here, it's your CI pipeline, and the spilled milk is your tears of fully justified frustration.

Now, let's isolate the failing app/library by first running these again:

node ./node_modules/.bin/jest --clearCache

and

nx reset

(Why would I keep the cache of something that is failing?)

This way, we should be able to track down the output of:

nx test that-f-library

If everything goes as expected, you will witness, in full terror:

- A 5-minute (or more) startup time for the test suite.

- A 3-test suite that executes and passes in mere milliseconds.

- An absurd number of transpiled files being generated in the Jest cache.

How do we fix this?

OK, time for a break. Step away. Breathe. Stare into the void. Question your life choices. Because clearly, something is very, very wrong.

The Real Question: Why Aren't We Testing Built Code?

Let's step back for a moment. Looking at the pipeline process holistically, an obvious thought emerges...

If we already run on CI:

nx affected -t lint

and

nx affected -t build

... it effectively produces, in a reasonable time, fully transpiled, production-ready code, right?

... so ...

Why test the source TS code when we can test the built code instead?

Wouldn't that at least reduce some of this transpilation madness? Wouldn't it prevent Jest from processing dependencies it doesn't need to touch?

It's almost as if we're making this harder on ourselves for no reason. But let's dig deeper before assuming anything.

Barrel files???

Some folks told me back in the day about the benefits of barrel files, used as if they were an NgModule, which by now is not the best choice for encapsulating and distributing components, services, etc. However, some folks treat the principle of encapsulation as their bible, and they place a significant number of barrel files to "encapsulate" the distribution of everything you develop in a library. Like the Bible, it has an index of books, chapters, verses, etc. Something thick to read in your free time. Genius analogy.

Sadly, we are talking about Jest, and Jest, like many other test runners, is an atheist: it doesn't keep a bookmark on barrel files the way you would when reading a big book. Instead, it follows every import referenced in the barrel file, and despair makes its entrance as Jest crawls those barrel files searching for dependencies and transpiles each one of them without mercy, like a real Pokémon Master in an animal shelter.

And like that, Jest will check its Pokédex. Oh! No transpiled Pidgey in your cache? Catch it and transpile it. It was super effective.

Don't believe it? Here are some articles, courtesy of a mysterious reviewer whose name I can't recall at the moment (anyway, you can see the reviewers of this article at the bottom of this page. Refined gentlemen.)

- What We have been complaining: slow start times

- Stop! Won't somebody please think of the... tests?

- who asked for an extra cup of barrel files?
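To make the crawl concrete, here is a toy model. It is not how Jest is implemented: it is just an import graph written as a plain object (all file names are hypothetical) and a function that lists every file reachable from a spec file. The same spec is measured twice, once importing through the barrel and once importing only the file it needs.

```typescript
// Each key is a file; each value lists the files it imports.
type ImportGraph = Record<string, string[]>;

const graph: ImportGraph = {
  "button.component.spec.ts": ["ui/index.ts"], // imports through the barrel
  "ui/index.ts": ["ui/button.ts", "ui/grid.ts", "ui/date-picker.ts"],
  "ui/button.ts": [],
  "ui/grid.ts": ["vendor/ag-grid.ts"],
  "ui/date-picker.ts": ["vendor/material.ts"],
  "vendor/ag-grid.ts": [],
  "vendor/material.ts": [],
};

// Returns every file reachable from `entry`: everything a runner would have
// to load (and transform) before the first test case can execute.
function filesToLoad(g: ImportGraph, entry: string): string[] {
  const seen = new Set<string>();
  const stack: string[] = [entry];
  while (stack.length > 0) {
    const file = stack.pop() as string;
    if (seen.has(file)) continue;
    seen.add(file);
    for (const dep of g[file] ?? []) {
      stack.push(dep);
    }
  }
  return Array.from(seen).sort();
}

const viaBarrel = filesToLoad(graph, "button.component.spec.ts");

// Same spec, but importing the button file directly instead of the barrel.
const directGraph: ImportGraph = {
  ...graph,
  "button.component.spec.ts": ["ui/button.ts"],
};
const direct = filesToLoad(directGraph, "button.component.spec.ts");

console.log(viaBarrel.length, direct.length); // 7 2
```

Seven files against two in a toy graph; swap the toy entries for a real UI library with hundreds of components behind one index.ts and the five-minute startup stops being a mystery.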

Speeding things up? Or a formula for disaster

On paper, testing pre-transpiled code sounds like a great idea. The code is modularized, optimized, and should, in theory, run faster. So, what if we just told Jest to use moduleNameMapper to resolve everything to the already-built output? Boom—problem solved, right?

Wrong.

It turns out, Jest wasn't really built with this in mind, and blindly pointing moduleNameMapper to transpiled code can backfire spectacularly. Instead of skipping unnecessary transformations, Jest might decide to take the scenic route, resolving modules AGAIN in a way that actually increases test execution time. That's right—what was meant to be a performance hack could turn into a slow-motion disaster.

So, before we set the entire testing pipeline on fire, let's consider what actually needs to be done:

- Keep running tests in local development using source files to ensure nothing explodes before deployment.

- Only in CI, build transpiled code once and test against it—this avoids redundant work and keeps Jest from going full meltdown mode.

- Get moduleNameMapper right, or else. Misconfiguring it will have the opposite effect, forcing Jest to resolve and transpile dependencies in the most inefficient way possible.

- Adjust transformIgnorePatterns wisely. Jest is notorious for deciding, on a whim, that something needs to be transpiled, even if you explicitly told it not to.

- Accept that Jest might not be the hero we thought it was. All that fanfare about speed and efficiency? Yeah, not when you're dealing with monorepos and large-scale Angular projects.

So, does this approach actually speed things up? Potentially—if done flawlessly. If not, well... you might just end up watching your CI logs go blank as Jest spirals into an existential crisis. Your call.

But let's not fly too close to the sun and get burned in the process. With such a gamble (honestly, this approach is quite risky and should be out of the question), it is better to re-evaluate our options. This is definitely not a good idea, and if you have read this far, you and I would agree that we shouldn't take this route.

We software engineers are resilient beasts, ready to hunt our prey in many different ways. So let's toss this idea in the trash and consider another approach: if whatever is being tested pulls in a large set of code, we have to focus on making those pieces small enough that our test doesn't choke under the sheer pressure. And that means mocking.

Mocking strategy

So, if Jest is dead set on making our lives miserable by transpiling half the world every time we run tests, the next best move is to trick it into doing less work. Enter mocking strategies, where we convince Jest that it doesn't really need to process those massive, bloated dependencies in full.

Here's how we fight back:

1. Mock Third-Party Libraries with moduleNameMapper (Properly This Time)

We've already established that moduleNameMapper can make things worse if misused, but if done right, we can redirect Jest away from unnecessary transpilation.

Instead of letting Jest resolve a UI library (like Ag-Grid or Angular Material) and transpile it from scratch, we can mock those libraries to return a lightweight stub. At global scope you can do something like this in your Jest configuration, so we address the biggest animals in the room first:

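    module.exports = {
      // Keys are regular expressions matched against import specifiers.
      moduleNameMapper: {
        "^ag-grid-angular$": "<rootDir>/jest-mocks/ag-grid-mock.js",
        // Angular Material is imported through secondary entry points
        // such as @angular/material/button, so the key has to match those.
        "^@angular/material/(.*)$": "<rootDir>/jest-mocks/material-mock.js",
      },
    };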

Then we create mock files like jest-mocks/ag-grid-mock.js:

    // Lightweight stand-in for ag-grid-angular: satisfies imports, does nothing else.
    module.exports = {
      AgGridAngular: jest.fn(() => ({
        onGridReady: jest.fn(),
        api: {
          sizeColumnsToFit: jest.fn(),
        },
      })),
    };

🎯 Why This Works: Jest will no longer waste time trying to transpile entire UI component libraries. Instead, it gets a lightweight stub that satisfies imports but does nothing else.
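Since Jest treats every moduleNameMapper key as a regular expression tested against the import specifier, it is cheap to sanity-check the keys before waiting minutes on a real run. The sketch below is a hypothetical helper, not a Jest API; the mapper entries and mock paths are illustrative. It shows which keys catch which imports, including the easy-to-miss case of secondary entry points such as @angular/material/button.

```typescript
// Illustrative mapper: keys are regular expressions, values are stub files.
const moduleNameMapper: Record<string, string> = {
  "^ag-grid-angular$": "<rootDir>/jest-mocks/ag-grid-mock.js",
  "^@angular/material$": "<rootDir>/jest-mocks/material-mock.js",
  "^@angular/material/(.*)$": "<rootDir>/jest-mocks/material-mock.js",
};

// Returns the mapper keys whose regular expression matches an import specifier.
function matchingKeys(specifier: string, mapper: Record<string, string>): string[] {
  return Object.keys(mapper).filter((pattern) => new RegExp(pattern).test(specifier));
}

const specifiers: string[] = [
  "ag-grid-angular",
  "@angular/material/button",
  "@angular/material",
  "ag-grid-community",
];

for (const specifier of specifiers) {
  const keys = matchingKeys(specifier, moduleNameMapper);
  const verdict = keys.length > 0 ? keys.join(", ") : "(not mapped, the real module is loaded)";
  console.log(`${specifier} -> ${verdict}`);
}
```

Note that the exact-match key for @angular/material never catches @angular/material/button, and nothing here catches ag-grid-community: any import that slips past the mapper is resolved and transpiled for real.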

This is nice to use, but if we were testing something specific, we might need to address it in the individual test file.

2. Use jest.mock() in Individual Tests

For dependencies that don't need to be loaded at all, we can simply mock them per test file instead of forcing Jest to deal with them. Assuming we need to mock a service, we can reference the service's module path directly and mock it.

    // The factory replaces the module, so the real file is never loaded.
    jest.mock("src/app/services/heavy-service", () => ({
      HeavyService: jest.fn(() => ({
        fetchData: jest.fn().mockResolvedValue([]),
      })),
    }));

🎯 Why This Works: Jest doesn't try to import the actual service, reducing unnecessary module resolution.

It is nice that we can pinpoint services, but what about something more selective? Sometimes you want to target the vulnerable points instead of taking on the animal in the room in its entirety.

3. Mock Parts of a Module Using jest.spyOn() (For Selective Mocking)

Sometimes, you want to use part of a module while preventing Jest from touching its heavy dependencies. Enter jest.spyOn(), which lets you hijack just the parts you need.

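For example:

    import * as HeavyModule from "src/utils/heavy-stuff";

    // Replace only computeSomething; every other export keeps its real implementation.
    jest.spyOn(HeavyModule, "computeSomething").mockImplementation(() => 42);

🎯 Why This Works: The rest of the module remains intact, and the real computeSomething is never executed. Keep in mind that the import itself still loads (and transpiles) the module, so this trims execution work rather than startup time.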

4. Use transformIgnorePatterns to Keep Jest Out of Trouble

If Jest insists on transpiling things it really shouldn't, tell it to back off using transformIgnorePatterns. For this to go right, you might want to get a refresher on Regex. The idea here is to ignore specific files or directories to be transformed, nothing else:

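    module.exports = {
      // Ignore (do not transform) node_modules, except lodash-es and date-fns.
      transformIgnorePatterns: ["node_modules/(?!(lodash-es|date-fns)/)"],
    };

In this case, Jest skips transforming everything imported from node_modules except lodash-es and date-fns, which are still transformed.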
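Negative lookaheads are easy to misread, so it can help to evaluate the pattern against a few paths before trusting it. The sketch below mirrors the documented rule (a file whose path matches any ignore pattern is not transformed) in a small hypothetical helper; the sample paths are made up.

```typescript
const transformIgnorePatterns: string[] = ["node_modules/(?!(lodash-es|date-fns)/)"];

// A file is left untransformed when its path matches any ignore pattern.
function isTransformed(filePath: string, patterns: string[]): boolean {
  return !patterns.some((pattern) => new RegExp(pattern).test(filePath));
}

const samplePaths: string[] = [
  "/repo/node_modules/lodash-es/add.js", // transformed: excluded from the ignore rule
  "/repo/node_modules/date-fns/format.js", // transformed: excluded from the ignore rule
  "/repo/node_modules/lodash/add.js", // ignored: "lodash" is not "lodash-es"
  "/repo/node_modules/rxjs/index.js", // ignored
  "/repo/libs/ui/src/button.component.ts", // transformed: not in node_modules at all
];

for (const filePath of samplePaths) {
  const verdict = isTransformed(filePath, transformIgnorePatterns) ? "transformed" : "ignored";
  console.log(`${verdict.padEnd(12)} ${filePath}`);
}
```

The packages named inside the lookahead are the ones Jest will spend time on, so that list should stay as short as the test suite allows.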

5. Mocking Angular Modules (Stop Loading Everything)

One of the worst offenders in test performance is Jest trying to resolve entire Angular modules for every test. Instead of loading a full module, create a lightweight mock module:

Example (Mocking Angular Modules in Jest)

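    import { NgModule } from "@angular/core";
    // Path reused from the jest.mock() example above; adjust it to your workspace.
    import { HeavyService } from "src/app/services/heavy-service";

    @NgModule({
      providers: [
        {
          // Swap the real service for a stub exposing the same method.
          provide: HeavyService,
          useValue: { fetchData: jest.fn().mockResolvedValue([]) },
        },
      ],
    })
    export class MockModule {}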

Then, in your test:

    import { TestBed } from "@angular/core/testing";

    TestBed.configureTestingModule({
      imports: [MockModule],
    });

🎯 Why This Works: Jest skips loading the entire real module, reducing initialization times and dependency resolution overhead.

ngMocks

Mocking is essential for maintaining some level of sanity in testing, keeping our CI pipeline from spiraling into a black hole of unnecessary computations. However, the real tragedy begins when libraries, services, or components change—because then, we have to remock everything. And let's be honest, nobody reads breaking change logs before installing updates.

This is where NgMocks is really effective: it automates the nightmare of manually maintaining mocks, transforming existing components, services, and modules into mock counterparts, and saving us from our usual incompetence at mocking things properly.

NgMocks is so extensive, I could probably write another article about it… but since I'm enjoying my well-deserved OOO time, I'll leave that task for another day. Instead, I'll direct you to this page so you can educate yourself and combine NgMocks with the horrors—I mean, knowledge—you've gained through this narrative. Trust me, it's worth your attention.

Conclusion

There are many reasons why tests slow down in CI. One of the biggest culprits? Not keeping effective mocking strategies that result in a successful transpilation of test artifacts that can be easily cached. If you don't store artifacts, you might as well close your eyes, forget you read this article, wander around the internet looking for solutions, and end up here again—experiencing a strange sense of déjà vu.

Keeping artifacts isn't just a convenience—it's the way to ensure your test suites receive the performance boost they desperately need. And since Nx Cloud was our tool of choice in this article, using a private cloud instance could provide even greater improvements for large codebases, ensuring faster test runs that don't feel like a medieval torture method.

Now that I've finally woken up from this fever dream, I can return to my desk, sip my coffee, and check that my test artifacts are intact and working.

…Until the next nightmare begins.

Thank you for reading. I hope this stirs up some deeply buried memories you'd rather not revisit—but hey, it's always good to keep an eye on the abyss, just in case.